Thinking Fast and Cheap

Faster CPUs enabled the last 50 years of computing. Faster LLMs will unlock the next 20.

The debate over whether LLM intelligence is hitting a "wall" suffers from one-dimensional thinking. LLM quality exists along three axes: intelligence, cost, and speed. So long as models keep advancing in speed and cost, decades of use cases are possible with present intelligence.

The relevant analogy is CPU speed between 1970 and 2005, and the use cases unlocked at each breakpoint. Our programming tools didn't get any smarter, but accelerating from 2 MHz to 2,000 MHz transformed our world. LLMs are a new computing architecture based on intelligence rather than boolean algebra – and we're still living in 1980.

A fast history of speed

In retrospect, most of the big tech companies during the clock speed regime appeared as soon as computationally possible:

35 years of compute-constrained startups shared common trends:

• Applications started as overnight batch processes, then became viable at 1-hour intervals, then minutes, then seconds

• Real-time processing (sub-second) was often the critical threshold for new business models

• Each generation enabled processing larger datasets in the same time window (e.g. Google vs Altavista)

An alternate history of speed

How well do compute constraints explain this era of tech history? Consider: if you were a time traveler, which major companies could have been built before they were? [1]

Most big companies were founded within two years of being computationally possible. Longer gaps indicate businesses where the limiting reagent wasn't compute, but consumer trust and network effects. We could've had Airbnb in 1999 on Craigslist, but consumers weren't culturally ready. Social networks like Facebook could've run in '95 (classmates.com), but launching among current Harvard students kicked off vital network effects. Slack was unusual – it could've run on decade-old CPUs, but there wasn't an acute business need to spur its adoption until distributed tech teams emerged in the late 2000s.

The VC mantra seems to hold: "TAM, Team, and [Clock]Timing".

LLMs as a new computing architecture

The last six years of LLMs have mostly been unlocking use cases along the possibility frontier of intelligence and cost:

BERT Era (2018-2020)

Content classification

Sentiment analysis

Document similarity matching

GPT-3 Era (2020-2022)

Content moderation

Early customer service automation

ChatGPT/GPT-4 Era (Late 2022-Present)

Average-human-quality writing + content generation (ChatGPT)

Useful coding copilots (Cursor)

Answer engines (Perplexity)

Agents (Llama 3.1/GPT-4o/Sonnet-3.5)

LLMs were only good enough for narrow domains – until suddenly (GPT-3.5) they were good enough for general use, leading to an explosion of uses.

The new speed regime: SLMs and ASICs

GPT-4-level intelligence was a critical quality breakpoint for pretty-reliable, clever-human-level intelligence, with most additional use cases now gated behind cost and speed. For example, real-time contextual ad generation has been possible since 2023, but the best ads were 40x too expensive and 5x too slow to run in production.

Starting in July 2024, "small" language models (gpt-4o-mini, llama-3.1) became good enough for many more use cases. Faster inference unlocks more still:

• Voice assistants need sub-500 ms latency to feel like natural conversation – a breakpoint some frontier startups are just about to reach.

• Real-time video analysis still needs extremely specialized ML models. Fast multi-modal foundation models will enable a universe of applications (especially robotics) but we're still very early.

• Error checking. With enough time and inference, LLMs can improve their reliability by thinking step-by-step and checking their work. This is already the case for minute/hour scale deep queries in law and financial research.
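One simple way to spend extra inference on reliability is to ask the same question several times and keep the most common answer (often called majority voting or self-consistency). The sketch below is only an illustration of that idea: `flaky_model` is a hypothetical stand-in for a real LLM call, and the sample count of 5 is an arbitrary assumption.

```python
from collections import Counter
from itertools import cycle
from typing import Callable, List


def majority_vote(answers: List[str]) -> str:
    """Return the answer that appears most often among the samples."""
    return Counter(answers).most_common(1)[0][0]


def ask_with_voting(ask: Callable[[str], str], question: str, samples: int = 5) -> str:
    """Query the model `samples` times and keep its most frequent answer."""
    return majority_vote([ask(question) for _ in range(samples)])


# Hypothetical stand-in for an LLM call: right twice, then wrong once, repeating.
_canned = cycle(["42", "42", "41"])


def flaky_model(question: str) -> str:
    return next(_canned)


print(ask_with_voting(flaky_model, "What is 6 * 7?"))
```

Each extra sample costs another full inference call, which is why this kind of checking only becomes practical as inference gets faster and cheaper.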

We're in the early days of the ASIC era for LLMs. Groq, Cerebras, and SambaNova enable Llama 3.1 8B-level intelligence at 2,000 tokens per second (TPS), but aren't available in significant commercial quantities. Google TPUs run Gemini at 200 TPS, but capacity is limited. Microsoft and Meta are both developing their own chips; Apple is positioned to take the lead in on-device inference.
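To make the throughput numbers concrete, here is a back-of-the-envelope sketch of how decode speed maps onto the 500 ms voice budget. The 150-token figure is an assumption (say, hidden reasoning the model must finish before it can start speaking), and time-to-first-token is ignored by default; only the 200 TPS, 2,000 TPS, and 500 ms figures come from the text.

```python
def generation_time_ms(num_tokens: int, tokens_per_second: float,
                       first_token_ms: float = 0.0) -> float:
    """Time to emit `num_tokens` at a steady decode rate, plus time-to-first-token."""
    return first_token_ms + 1000.0 * num_tokens / tokens_per_second


def fits_budget(num_tokens: int, tokens_per_second: float,
                budget_ms: float = 500.0, first_token_ms: float = 0.0) -> bool:
    """True if the whole generation finishes inside the latency budget."""
    return generation_time_ms(num_tokens, tokens_per_second, first_token_ms) <= budget_ms


REPLY_TOKENS = 150  # assumed number of tokens needed before the assistant can respond

for tps in (200, 2000):
    ms = generation_time_ms(REPLY_TOKENS, tps)
    verdict = "fits" if fits_budget(REPLY_TOKENS, tps) else "misses"
    print(f"{tps} TPS: {ms:.0f} ms, {verdict} the 500 ms budget")
```

Under these assumed numbers, 200 TPS takes 750 ms and misses the budget, while 2,000 TPS takes 75 ms and leaves room for several more model calls.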

The next 20 years of AI

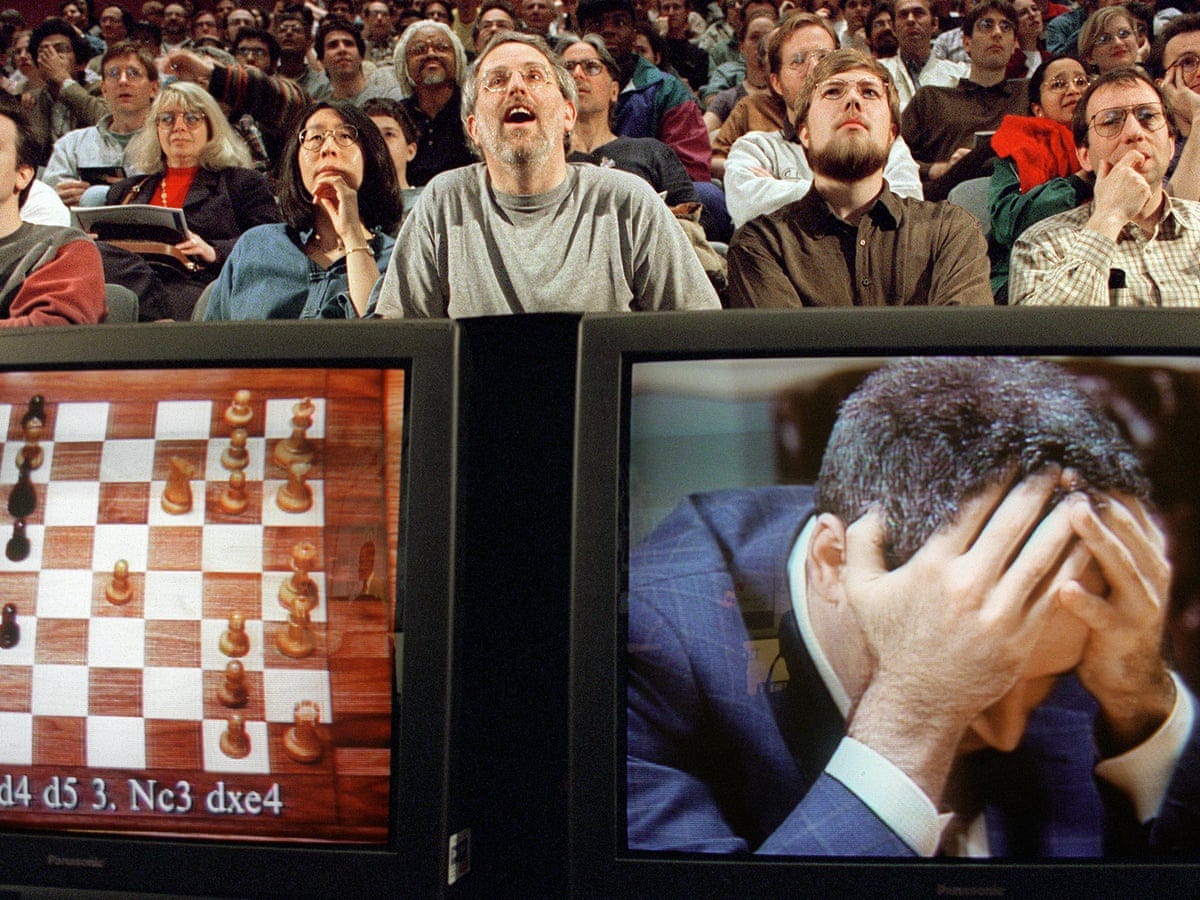

If I had a venture fund, I'd focus entirely on application-layer companies that leverage breakpoints in faster LLM inference. The term "agentic" is overhyped, but harnessing sequential and parallel chains of retrieval and reasoning is foundational to the LLM computing architecture. OpenAI's o1 model is an example of using more inference to achieve more intelligent performance – much like a B-student with an open book and unlimited time can outperform an A-student on a test. Deep Blue wasn't "smarter" than Kasparov, but it achieved chess superintelligence by evaluating 200M positions per second.
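As a rough sketch of what "sequential and parallel chains" can look like in code: independent retrievals fan out concurrently, then a reasoning step that depends on all of them runs afterwards. Everything here is hypothetical scaffolding, with `retrieve` and `reason` standing in for real retrieval and LLM calls and `asyncio.sleep` simulating their latency.

```python
import asyncio
from typing import List


async def retrieve(source: str, query: str) -> str:
    """Hypothetical retrieval call; the sleep simulates network or model latency."""
    await asyncio.sleep(0.01)
    return f"{source}: notes on {query}"


async def reason(query: str, evidence: List[str]) -> str:
    """Hypothetical reasoning call that consumes all the retrieved evidence."""
    await asyncio.sleep(0.01)
    return f"answer to {query!r} from {len(evidence)} sources"


async def answer(query: str, sources: List[str]) -> str:
    # Parallel stage: the retrievals are independent, so run them concurrently.
    evidence = await asyncio.gather(*(retrieve(s, query) for s in sources))
    # Sequential stage: reasoning cannot start until the evidence is in.
    return await reason(query, list(evidence))


print(asyncio.run(answer("pricing", ["docs", "web", "crm"])))
```

The parallel stage costs roughly one call's latency no matter how many sources there are, while every sequential stage adds a full call. That is why per-call speed compounds so quickly in longer chains.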

Right now, it takes a lot of tricks to run a smart-enough LLM at high enough speed for frontier use cases. You can jump half a generation ahead with knowledge distillation and quantization. This is where a good ML team (or good relations with compute providers) can win a company a six-month lead on its competition.
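For readers unfamiliar with quantization, here is a minimal sketch of the basic idea, assuming the simplest scheme (symmetric int8 with a single scale per tensor). Production systems use more elaborate variants, and the weight values below are made up for illustration.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 values."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 1.27]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)
print(max(abs(a - b) for a, b in zip(weights, restored)))
```

Each weight now takes one byte instead of four (versus float32), at the price of a rounding error of at most half the scale per weight. Less memory traffic per token is a large part of why quantized models run faster.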

Since I can only run one company as a founder, I’ve chosen advertising as my index bet on AI inference. Historically, advertising has functioned as a machine that transforms additional compute and data into money, via better ad targeting and relevance. AIs that essentially stop time and consider the ideal ad to show based on a user’s context, history, and preferences will have an unlimited appetite for compute.

Even if AI never gets smarter than Sonnet-3.5, superhuman speed is its own form of super-intelligence. But if it does, the smartest model will have a multiplicative effect on the intelligence of the systems it orchestrates.

[1] In video game speedrunning terms, were there any opportunities to sequence break?